Improving students’ understanding and explanation skills through the use of a knowledge building forum

Research in education has put forward the importance of helping students develop deep comprehension of explored subjects in order for them to be better prepared for tomorrow’s knowledge society (Bereiter, 2002; Bransford, Brown & Cocking, 2000; Drucker, 1993; United Nations Educational, Scientific and Cultural Organization [UNESCO], 2008). Students are too often asked to repeat what they know rather than to explain it and connect the pieces of their knowledge with one another (Sawyer, 2006; Wiske, 1998a). Therefore, the challenge for educational reform is to design learning environments that are more centered on student learning and that will allow students to reach a deeper level of understanding.

Technology integration takes many shapes and forms, and initiatives abound that are aimed at preparing learners for life in a society evolving rapidly because of easier access to information and a continuous availability of communication media (Bielaczyc, 2006; Bielaczyc & Collins, 2006; Collins & Halverson, 2009; Partnership for 21st Century Skills, 2008; Perkins, Crismond, Simmons & Unger, 1995). Whether it is microblogging, regular blogging, or other forms of social networking, we understand that teachers use these tools as a means of developing technology literacy, and sometimes, information literacy, which may be defined as the capacity to interact with information (Markauskaite, 2006). Young people need to be creative and innovative in order to thrive in the 21st century, and the use of technology in schools is often considered an effective manner of developing these skills, particularly because of student interest in communicating through technology as well as the limitless possibilities for their application (Reigeluth & Carr-Chellman, 2009; Wiske, Franz, & Breit, 2005).

Morroco (2001), who studied long-term teacher professional development projects at the Education Development Center in the USA, identifies four principles to design better learning environments based on deepening student understanding: 1) Authentic tasks to fully engage students in their learning and develop a deeper understanding of the content; 2) Opportunities to build cognitive strategies; 3) Learning that is socially mediated so students can interact to build and integrate knowledge; and 4) Engaging in constructive conversation so students can express and then integrate their own ideas and questions.

To develop better student comprehension skills, explanation-driven rather than fact-driven teaching practices have proven to be more effective (Bransford et al., 2000; Coleman, 1998; Hakkarainen, 2003, 2004; Hatano & Inagaki, 1987; Lipponen, 2000; Meyer & Woodruff, 1997; Roth, McGinn, Woszczyna, & Boutonné, 1999). Certain technological tools, such as Knowledge Forum, have been shown to support explanation-seeking pedagogies as well as the inquiry process (Bereiter & Scardamalia, 1996; Brown, Ellery, & Campione, 1998; Hakkarainen, 2003). More specifically, explanation-seeking pedagogies have been proven to be very efficient in science education (Coleman, 1998; Hatano & Inagaki, 1987; Krajcik, Soloway, Blumenfeld, & Marx, 1998; Roth et al., 1999).

In light of this, how can the use of technology in the classroom improve students’ explanation skills during a classroom-based discussion (e.g., to provide information, an opinion, or supportive evidence; to make an inference; to point to a cause; to generate a new question; to offer a better explanation regarding a problem), thus permitting them to attain the essential learning outcomes of the elementary or secondary curriculum? We believe that one way for this to occur is through a technological tool that can allow scaffolding of student inquiry focused on knowledge building rather than knowledge telling (Bereiter, 2002), designed to support the collaborative investigation of authentic problems (Chin & Osborne, 2010; Scardamalia, 2006). Knowledge building involves idea or explanation improvement whereas knowledge telling entails writing what one knows, especially facts. In this regard, the collaborative nature of learning as well as the support of the community of learners (Brown & Campione, 1994) are also important aspects to consider in the design of the learning environment (Rummel & Spada, 2005). Indeed, we now know that students can learn by interacting with technology and with their peers (Cakir, Zemel, & Stahl, 2009; Kreijns, Kirschner, & Vermeulen, 2013). The use of technology in a Web 2.0 world reminds us that we are not alone in front of our computer screens; schools can profit from this connectedness in order to enrich the learning environment of their students. It is therefore important to consider whether we should require students to power down their personal technological devices as they enter the classroom, a restriction likely to contrast with what they experience in their everyday life, or whether they should instead be invited to use them for specific learning activities.

Classroom-based, student-centered, telecollaborative environments can provide opportunities for students to engage in the knowledge construction process (Engle & Conant, 2002; Zhang, Scardamalia, Reeve, & Messina, 2009). Online collaborative learning also calls for a significantly different teacher role, collaboration (Harasim, 2011; Roschelle, Bakia, Toyama, & Patton, 2011), and an emphasis on student progressive discourse, that is, a classroom discussion devoted to the improvement of explanations (Bereiter, 1994). The students in these types of learning environments are not only active learners, but together, they become knowledge creators when using knowledge to resolve problems and be innovative (Bereiter & Scardamalia, 2006, 2010). The use of technology and the study of its contribution to verbal and written discourse also show that some tools have a great potential for supporting classroom conversation (Hewitt, 2002; Laferrière, Erickson, & Breuleux, 2007; Sawyer, 2006; Zhao & Rop, 2001). What students write on screen can add value to the work accomplished in the classroom (Barron, 2003; Rummel & Spada, 2005; White & Pea, 2011), in addition to motivating them to engage in classroom learning activities (British Educational Communications and Technology Agency, 2001; Cox, 1997; Passey, 2001; Sharples, Graber, Harrison, & Logan, 2009). In this paper, we will present the context within which such online writing took place and the methodology used to analyze the quality of their collaborative writing and its outcomes.

The Context of the Study

The Remote Networked Schools (RNS) initiative in the province of Quebec (Canada) aimed to enrich the learning environment of small rural francophone schools by providing students and teachers with more opportunities for interaction through the use of telecollaborative technologies. Given a substantial decline in population from rural exodus, these rural schools have faced many issues such as a lack of specialized resources for students, multilevel classrooms, small numbers of registered students, and professional isolation. In 2010, 23 school districts, more than 200 schools, 170 teachers, and over 2,000 students were involved in this initiative. Since 2002, two telecollaborative tools were made available to the participating classrooms: an easy-to-use desktop videoconferencing tool (iVisit) and Knowledge Forum (KF), a discussion forum based on the theory of knowledge building (Scardamalia, 2004). In this paper, we focus on the effectiveness of the latter tool, KF.

Hundreds of classes involved in the RNS initiative have worked collaboratively online when doing learning activities and projects, at a frequency ranging from once a month to once per trimester or per year, anchored in the Quebec school curriculum. Over the years, professional development was offered online on-demand and 7-10 scheduled two-hour sessions were offered to teachers regarding authentic and open questioning and collective knowledge building as well as student-centred learning environments. The initiative provided skilled human resources that were available for real-time online support all day long regarding the planning of learning activities, reflection on the progress of specific collaborative activities, and setting of goals for improving student writing and knowledge-building ability (Hamel, Allaire, & Turcotte, 2012).

After six years of implementation, the use of the telecollaborative tools became an integral part of RNS classroom practices, comprising approximately one third of classroom time. Impact measurement of the initiative included indicators such as student motivation, development of innovative practices, and organizational changes (Laferrière et al., 2011). Some teachers had reported that students who were more active on KF were more successful and further developed in their explanation skills than less active KF users. This was the impetus for the study presented here, i.e. to focus on the use of KF and to confirm (or not) its perceived impact on student learning.

We were also aware of an increase in student motivation regarding writing (Laferrière et al., 2011). Teachers were reporting evidence of a real-audience effect, that is, students reading other students’ work, especially regarding science and technology activities supported by KF (Laferrière et al., 2011). There was some evidence of students improving their ideas and their explanations (knowledge building); however, teachers wanted to validate their perceptions. Were individual competencies of students being developed as a result of involvement in these online collaborative activities? Were students individually able to explain what they had collectively discussed in KF? Were they able to apply the shared knowledge in other contexts? In order to answer these questions, we set up a mixed methods study with schools.

This paper presents the results of two different discourse analyses that were applied to student oral (pre- and post-) interviews and written (KF) discourse; the results obtained were quantified. We then triangulated the quantified qualitative data with quantitative data related to student KF use. Conducted with volunteer teachers from four RNS school districts, this study allowed us to closely observe the effect of KF use on student learning, with a specific focus on students’ explanation skills.

The research questions addressed in this study were the following:

- Can the use of the knowledge building tool contribute to the development of students’ explanation skills?

- Can distinct levels of KF use lead to different magnitudes of change in explanation skills?

Methodology

Participants

A total of 186 students (101 girls and 85 boys) of the RNS initiative in the province of Quebec participated in the study. The academic level of the students ranged from grades 3 to 6. The participants were recruited from 19 different classrooms and four school boards. Among these classes, some were located in socio-culturally and -economically disadvantaged areas. Likewise, some schools struggled with high dropout rates and serious academic motivational problems.

Material

The curriculum for primary education in Quebec includes five areas of study: 1) languages (French and English), 2) mathematics, science, and technology, 3) social sciences, 4) arts, and 5) personal development. The curriculum is based on the development of competencies (three per area of study), and disciplinary core ideas (essential knowledge). These core ideas guided the development of the questionnaires used with the students in the present study. Most of the teachers used authentic questioning strategies in the forum to foster students’ understanding (Wiske, 1998a, 1998b) of various phenomena linked to the curriculum (Laferrière et al., 2011). Thus, without knowing precisely in advance the questions that would be studied by the students and their teacher, we knew the intended area of learning, the skills, and the disciplinary core ideas targeted.

The telecollaborative environment used by students was KF, which provides applets to analyze the students’ usage of the forum (e.g. the number of written and read contributions, the use of scaffolds, keywords, etc.). An applet is a tool to monitor the activity in the forum. Specifically, the present study used the number of written and read contributions as the dependent variables associated with the students’ use of KF.

To evaluate the depth of explanation associated with the students’ contributions in KF, each written input was coded on a five-level scale from isolated facts to complete explanations. Based on two existing grids (i.e. Chan & van Aalst, 2008; Hakkarainen, 2003), a new analysis grid that better fit our context was developed (see Table 1). The interrater agreement obtained with three coders was 89%, which is considered a very good level of reliability (Miles & Huberman, 1999).1 Each contribution was coded and then scored regarding its depth of explanation, and a mean score was obtained for each student. This mean explanation score served as a dependent variable.
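The two computations described above, agreement among coders and a per-student mean score, can be sketched as follows. This is only an illustration under stated assumptions: the paper does not say how agreement among the three coders was calculated, so the average pairwise percent agreement used here is an assumption, and all codes and student identifiers are hypothetical.

```python
from itertools import combinations
from statistics import mean


def pairwise_percent_agreement(codes_by_coder):
    """Average, over all coder pairs, of the share of items coded identically.

    Assumption: agreement is averaged over coder pairs; the study may have
    used a different definition.
    """
    pair_rates = []
    for a, b in combinations(codes_by_coder, 2):
        matches = sum(1 for x, y in zip(a, b) if x == y)
        pair_rates.append(matches / len(a))
    return 100 * mean(pair_rates)


def mean_depth_by_student(coded_notes):
    """Average the depth level of each student's contributions.

    coded_notes: iterable of (student_id, depth_level) pairs.
    """
    levels = {}
    for student, level in coded_notes:
        levels.setdefault(student, []).append(level)
    return {student: mean(vals) for student, vals in levels.items()}


# Hypothetical depth codes given by three coders to the same five contributions.
coders = [
    [1, 2, 3, 4, 2],
    [1, 2, 3, 3, 2],
    [1, 2, 3, 4, 2],
]
agreement = pairwise_percent_agreement(coders)

# Hypothetical final codes attached to hypothetical student identifiers.
notes = [("s1", 2), ("s1", 3), ("s2", 1), ("s2", 1), ("s2", 4)]
scores = mean_depth_by_student(notes)

print(f"agreement: {agreement:.1f}%")
print(scores)
```

The per-student mean is what would then enter the group comparisons as the depth of explanation score.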

Table 1. Analysis grid of the depth of explanation in the students’ written discourse on KF

| Depth of explanation | Definitions | Examples from an Ethic and Religious Cultures topic |
|---|---|---|
| 1 | Gives his/her opinion without providing facts, evidence, or elaboration. Repeats an already mentioned fact. Mentions facts or enumerates facts. | The priest speaks about Jesus. |
| 2 | Names and describes factual information (general descriptions). Organizes facts very briefly (descriptive) without making clear links to the question. Is able to use examples and connectors. | We found a temple in Montreal called Dao and it was built in 1957. |
| 3 | Makes inferences supported by facts. Partially explains (limited explanations or partially articulated constructions) beyond the simple description or information. Possible relevant answer to the inquiry question. | There are many because there are more and more people who want to pray in the world. |
| 4 | Makes claims supported by explanations, evidence and/or relevant examples. Structure is clear, articulate, is based on an intuitive explanation or introduces a scientific explanation. | Because there are different people who have different beliefs than us, this is why we do not have the same place to pray and there are more places of worship. |

The quality of explanation was also assessed through pre- and post-activity interviews. These took the form of oral activities that required students to explain the topic they were going to explore (or had explored) in class. For example, students working on the different climates were asked: Are the alternating seasons the same everywhere in the world? Why or why not?

The students’ responses to the interviews were analyzed qualitatively for the quality of the explanation provided (McNeill, Lizotte, Krajcik, & Marx, 2006; Turcotte, 2008). Table 2 presents the coding rubric used in this analysis. Interrater agreement was achieved between three coders to ensure the validity of the coding (Miles & Huberman, 1999), with an average rate of 93% for the three learning domains (science, social science, and personal development). This qualitative analysis allowed us to generate quantitative results reflecting the quality of explanation given by each of the interviewed students.

Table 2. Rubric for the pre- and post-activity interviews

| Score | Rubric | Definition | Examples |
|---|---|---|---|
| 0 | No explanation | No answer; Incoherent, incomplete, and incorrect explanation | I really don’t know. |
| 1 | Partial explanation | Incomplete but correct explanation; Complete but partially incorrect explanation | It’s like fertilizer such as plants. |
| 2 | Complete explanation | Complete and correct explanation | Composting is putting an orange peel, for example in a tank instead of putting it in the trash, it will decompose and we will be able to use as fertilizer after. |

NOTE. The examples are based on the question: What is composting? (essential knowledge, grade 3 and 4, 8-9 years old).

Procedure

At the beginning of the project, the teachers informed us of their pedagogical intentions in relation to the curriculum. They were not aware of the interview questions or the results until after the post-interviews were conducted. In order to answer our research questions, pre- and post-activity individual interviews were conducted with the students to evaluate the students’ quality of explanation of certain phenomena related to the subject addressed in KF. Between the pre- and post-activity interviews, the students worked in KF for approximately two to four weeks. The post-activity interviews were conducted up to two weeks after the completion of the learning activities on KF. If, for some reason, an entire classroom could not be interviewed, then the teachers were asked to select nine of their students for the interviews: three students at three different levels of ability. The teacher selection allowed us to avoid interviewing only the stronger students. However, the interviewers were deliberately not aware of the students’ level of ability throughout the experiment.

Finally, three classrooms did not work on KF during that time period because their teacher encountered technical and time-related problems. Since their students were interviewed before and after their activity, we decided to use these classes as a control group.

Results

Group formation

The first step was to form groups based on the students’ use of KF. The work done by the students in KF was analyzed and large differences were found in the use of the forum, especially regarding the number of written and read contributions. These observations suggested the presence of different user profiles in the sample, or active and less active KF users in terms of notes written and notes read. In order to confirm this assumption, a cluster analysis was conducted on the experimental sample, i.e. students who had used KF between the pre- and post-activity interviews. Results of a hierarchical cluster analysis (using the Ward Method and the Squared Euclidean distance measure), with the number of read and written contributions as the dependent variables, suggested that the experimental group could be partitioned into four groups (Burns & Burns, 2008). All grades were represented in each group.
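To make the clustering step more concrete, here is a minimal sketch of agglomerative clustering with Ward's criterion on squared Euclidean distances, written in plain Python. It is not the authors' procedure or software: the data points are hypothetical (written, read) counts, the function names are illustrative, and the example stops at three clusters only because the toy data contain three clearly separated profiles, whereas the study retained four groups.

```python
def squared_euclidean(p, q):
    """Squared Euclidean distance between two points."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def centroid(points):
    """Coordinate-wise mean of a list of points."""
    n = len(points)
    return tuple(sum(coords) / n for coords in zip(*points))


def ward_clusters(points, k):
    """Merge clusters until k remain, always choosing the merge that adds
    the least within-cluster sum of squares (Ward's criterion)."""
    clusters = [[p] for p in points]
    while len(clusters) > k:
        best = None
        for i in range(len(clusters)):
            for j in range(i + 1, len(clusters)):
                a, b = clusters[i], clusters[j]
                # Ward merge cost: |A||B| / (|A| + |B|) * ||c_A - c_B||^2
                weight = len(a) * len(b) / (len(a) + len(b))
                cost = weight * squared_euclidean(centroid(a), centroid(b))
                if best is None or cost < best[0]:
                    best = (cost, i, j)
        _, i, j = best
        clusters[i] = clusters[i] + clusters[j]
        del clusters[j]
    return clusters


# Hypothetical (written, read) contribution counts for eight students.
students = [(4, 30), (0, 6), (15, 120), (1, 9), (5, 38), (3, 7), (16, 130), (2, 5)]
profiles = ward_clusters(students, 3)

for members in sorted(profiles, key=len):
    print(sorted(members))
```

Because the raw counts are used without standardization in this sketch, the variable with the larger range (notes read) dominates the distances; whether the study standardized its variables is not stated.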

KF use comparison across groups

The second step consisted of comparing KF use across the previously formed groups and by sex. As the distributions of the numbers of written and read contributions were positively skewed, a non-parametric approach was used to assess the possible differences between groups.2 Thus, following Finch’s (2005) recommendations, a 4 (groups: Group 1 vs. Group 2 vs. Group 3 vs. Group 4) x 2 (sex: Girls vs. Boys) nonparametric MANOVA was conducted on the number of written and read contributions. Using Pillai’s trace, the results revealed a significant group effect, F(6, 318) = 70.76, p < .001, ηp² = .57. Results of Roy-Bargmann stepdown F tests suggested that both variables contributed significantly to the group effect (both ps < .001). No other main or interaction effects were significant (all ps > .05).

In order to locate the differences between the groups, we conducted a series of Mann-Whitney tests. Type I error inflation was controlled by using an alpha level of .008 (i.e. .05/6 planned comparisons). The results revealed that almost all groups differed significantly on the number of written and read contributions (all ps < .008). The only exception was that the number of read contributions did not differ significantly between Groups 3 and 4. Table 3 presents the means and the standard deviations of the written and read contributions according to group.

Table 3. Means and standard deviations of the written and read contributions for each group

| Groups | n | Written contributions (M) | Written contributions (SD) | Read contributions (M) | Read contributions (SD) |
|---|---|---|---|---|---|
| Group 1 | 57 | 3.98 | 2.01 | 34.61 | 28.30 |
| Group 2 | 36 | 15.29 | 7.24 | 125.57 | 49.16 |
| Group 3 | 41 | 0.56 | 0.50 | 7.49 | 7.14 |
| Group 4 | 33 | 2.58 | 0.97 | 7.00 | 4.28 |
| Control | 19 | - | - | - | - |
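The pairwise comparison step can be illustrated with a short sketch: four groups give six pairwise comparisons, which is where the corrected alpha level of .05/6 ≈ .008 comes from, and each comparison rests on a Mann-Whitney U statistic. The code below is an assumption-laden illustration rather than the authors' analysis: the function names and the contribution counts are hypothetical, and it computes only the U statistic (a p-value would normally be obtained from a statistics package).

```python
from itertools import combinations


def rank_with_ties(values):
    """Return 1-based ranks, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        average = (pos + end) / 2 + 1
        for k in range(pos, end + 1):
            ranks[order[k]] = average
        pos = end + 1
    return ranks


def mann_whitney_u(x, y):
    """U statistic for sample x: rank sum of x minus its minimum possible value."""
    ranks = rank_with_ties(list(x) + list(y))
    rank_sum_x = sum(ranks[: len(x)])
    return rank_sum_x - len(x) * (len(x) + 1) / 2


groups = ["Group 1", "Group 2", "Group 3", "Group 4"]
pairs = list(combinations(groups, 2))
alpha = 0.05 / len(pairs)  # Bonferroni-style correction over all pairs

# Hypothetical numbers of written contributions for two small groups.
moderately_active = [3, 4, 5, 2]
very_active = [12, 15, 20, 9]
u = mann_whitney_u(moderately_active, very_active)

print(len(pairs), round(alpha, 3), u)
```

A U of zero for one sample means that every value in it is lower than every value in the other sample, which is the most extreme separation possible.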

Quality of explanation across groups, sex, and test time

The third step was designed to compare the quality of explanation according to group and sex. As the pre- and post-activity measures comparison across groups might have conveyed a regression to the mean effect (Nielson, Karpatschof, & Kreiner, 2007),3 the post-activity scores were corrected by following Nielson et al.’s (2007) recommendations.

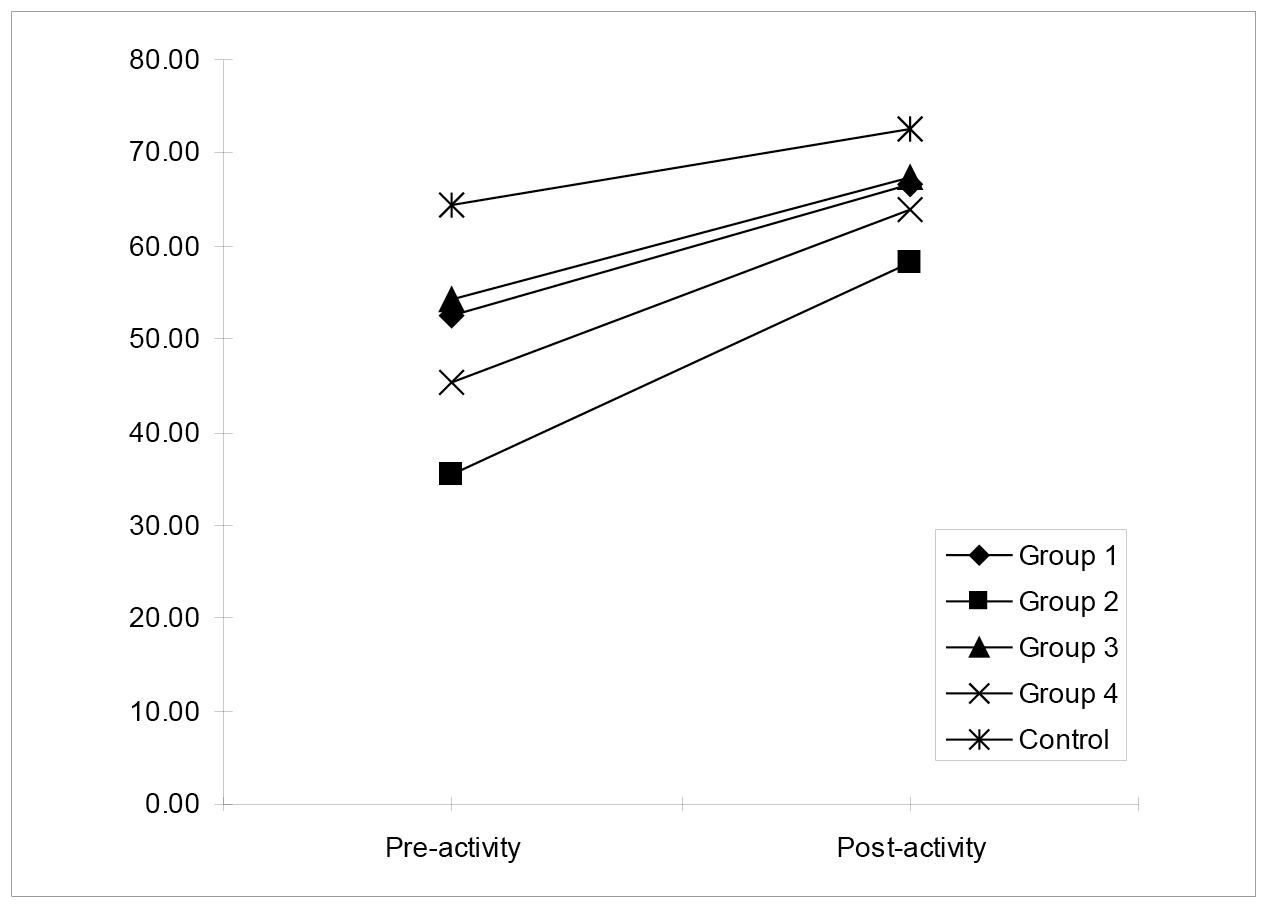

A 5 (groups: Group 1 vs. Group 2 vs. Group 3 vs. Group 4 vs. Control) x 2 (sex: Girls vs. Boys) x 2 (test time: pre- vs. post-activity) repeated measures ANOVA was conducted in order to verify if the quality of explanation differed according to these factors. The results revealed that the quality of explanation was significantly higher for the post-activity as compared to the pre-activity, F(1, 176) = 252.69, p < .001, ηp² = .59. Furthermore, there was a significant interaction between the test time and group factors, F(4, 176) = 6.18, p < .001, ηp² = .12. No other main or interaction effects were significant. Figure 1 presents the mean quality of explanation according to group and test time.

In order to clarify the interaction, multiple analyses were conducted. Results of an ANOVA 5 (groups: Group 1 vs. Group 2 vs. Group 3 vs. Group 4 vs. Control) conducted on the pre-activity quality of explanation scores revealed that there was at least one significant difference between groups: F(4, 181) = 9.32, p < .001, ηp² = .17. Pairwise comparisons (with Bonferroni correction) revealed that compared to Group 2, the pre-activity quality of explanation scores were significantly higher for Groups 1 and 3 as well as for the control group. Likewise, the control group had significantly higher pre-activity quality of explanation scores compared to Group 4. No other comparison yielded significant differences.

Results of an ANOVA 5 (groups: Group 1 vs. Group 2 vs. Group 3 vs. Group 4 vs. Control) conducted on the corrected post-activity quality of explanation scores revealed that there was no significant difference between groups, F(4, 181) = 1.59, p > .05, ηp² = .03.
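For readers who wish to check the effect sizes reported for these ANOVAs, partial eta squared can be recovered from an F value and its degrees of freedom through the standard identity ηp² = (F × df_effect) / (F × df_effect + df_error). The sketch below applies it to the four univariate F tests reported above; the function name and the row labels are illustrative only.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared implied by an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# (label, F, df_effect, df_error) as reported in the text above.
reported = [
    ("test time", 252.69, 1, 176),
    ("test time x group", 6.18, 4, 176),
    ("group, pre-activity", 9.32, 4, 181),
    ("group, corrected post-activity", 1.59, 4, 181),
]

effect_sizes = {
    label: round(partial_eta_squared(f, df1, df2), 2)
    for label, f, df1, df2 in reported
}

for label, value in effect_sizes.items():
    print(f"{label}: {value:.2f}")
```

The computed values (.59, .12, .17, and .03) agree with those reported in the text.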

Figure 1. Mean of the pre- and corrected post-activity scores according to group.

Quality of explanation’s magnitude of change across groups and sex

The fourth step aimed at comparing the magnitude of change in the quality of explanation according to group and sex. As was the case with the previous analyses, the magnitude of change was controlled for differences on the pre-activity scores. Thus, an ANOVA 5 (groups: Group 1 vs. Group 2 vs. Group 3 vs. Group 4 vs. Control) x 2 (sex: Girls vs. Boys), with the pre-activity measure included as a covariate, was conducted. The results revealed a significant covariate effect, F(1, 175) = 54.56, p < .001, ηp² = .24. Similarly, there was a significant group effect, F(4, 175) = 4.60, p < .05, ηp² = .10. No other main or interaction effects were significant. Pairwise comparisons (with Bonferroni correction) revealed that the magnitude of change of Group 2 was significantly higher than those of Groups 1 and 3, as well as that of the control group. Table 4 presents the mean and standard deviation of the magnitude of change for each group.

Table 4. Mean and standard deviation of the magnitude of change for each group

| Groups | M | SD |
|---|---|---|
| Group 1 | 13.29 | 11.27 |
| Group 2 | 27.67 | 15.40 |
| Group 3 | 11.58 | 11.88 |
| Group 4 | 18.79 | 18.52 |
| Control | 3.39 | 12.07 |

NOTE. Despite the large standard deviations, Levene’s test of equality of error variances was non-significant.

Results from Pearson correlations indicated that the magnitude of change was significantly related to both the number of read (r = .35, p < .001) and written (r = .40, p < .001) contributions. Nevertheless, results from a multiple regression revealed that the number of written contributions was the only significant predictor of the magnitude of change (R² = .16, p < .001).
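As a small illustration of the statistics in this paragraph, the sketch below computes a Pearson correlation in plain Python and shows the arithmetic linking the two reported values: a single predictor correlated at r = .40 with the outcome accounts for .40² = .16 of its variance, which matches the reported proportion of explained variance. The data in the example are hypothetical and the function name is illustrative.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length samples."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


# Hypothetical data: notes written and magnitude of change for five students.
written = [1, 2, 3, 4, 5]
change = [2, 4, 5, 4, 5]
r = pearson_r(written, change)

# Variance explained by a single predictor equals the squared correlation.
explained_by_written = round(0.40 ** 2, 2)

print(round(r, 3), explained_by_written)
```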

Depth of explanation comparison across groups and sex

The fifth and last step intended to compare the depth of explanation associated with the students’ written contributions in KF according to groups and sex. As the variance differed significantly across groups, a non-parametric approach was used.

First, results from a Mann-Whitney test revealed that the depth of explanation was not significantly higher for girls (M = 2.58, SD = 0.81) compared to boys (M = 2.56, SD = 0.83).

Secondly, results from a Kruskal-Wallis test revealed that there was at least one significant difference between the four experimental groups (p < .05). With an alpha level of .008 (.05/6 planned comparisons), results from Mann-Whitney tests showed that Group 2’s depth of explanation scores were significantly lower than those of Groups 1, 3 and 4 (all ps < .008). Table 5 presents the mean and standard deviation of the depth of explanation for each group.

Table 5. Mean and standard deviation of the depth of explanation for each group

| Groups | M | SD |
|---|---|---|
| Group 1 | 2.52 | 0.83 |
| Group 2 | 2.09 | 0.30 |
| Group 3 | 2.78 | 0.80 |
| Group 4 | 2.82 | 0.88 |
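The omnibus test used in this step can be sketched as follows. The Kruskal-Wallis statistic pools all scores, ranks them, and compares the rank sums of the groups: H = 12 / (N(N + 1)) × Σ(Rᵢ² / nᵢ) − 3(N + 1), divided by a correction factor when ties are present. The code is a plain-Python illustration with hypothetical depth scores and illustrative function names, not the authors' analysis, and it returns only H (the p-value would come from a chi-square distribution with one fewer degrees of freedom than there are groups).

```python
def average_rank(value, pooled):
    """Rank of value in the pooled sample, averaging ranks over ties."""
    less = sum(1 for v in pooled if v < value)
    equal = sum(1 for v in pooled if v == value)
    return less + (equal + 1) / 2


def kruskal_wallis_h(groups):
    """Kruskal-Wallis H statistic with the usual correction for ties.

    Not defined when every pooled value is identical (the correction is zero).
    """
    pooled = [v for g in groups for v in g]
    n_total = len(pooled)
    rank_term = sum(
        sum(average_rank(v, pooled) for v in g) ** 2 / len(g) for g in groups
    )
    h = 12 / (n_total * (n_total + 1)) * rank_term - 3 * (n_total + 1)
    # Tie correction: 1 - sum(t^3 - t) / (N^3 - N), t = size of each tie group.
    counts = {}
    for v in pooled:
        counts[v] = counts.get(v, 0) + 1
    ties = sum(t ** 3 - t for t in counts.values())
    return h / (1 - ties / (n_total ** 3 - n_total))


# Hypothetical mean depth of explanation scores for three small groups.
depth_scores = [
    [2.0, 2.1, 2.2],
    [2.5, 2.6, 2.8],
    [3.0, 3.1, 3.4],
]
h_statistic = kruskal_wallis_h(depth_scores)

print(round(h_statistic, 2))
```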

Discussion

The aim of the study was to validate the teachers’ perception that students who were active users on KF were learning more, and further developing their explanation skills. More precisely, the objectives were to: (a) verify if and how the use of the knowledge building tool contributed to the development of the students’ explanation skills, and (b) explore whether the magnitude of change in the quality of explanation differed depending on KF user profiles. The study also aimed to confirm (or not) the researchers’ belief that a collaborative knowledge building tool such as KF would not only enrich the learning environment of the students, but that such collective efforts would lead to better individual student learning.

First, the results revealed that there were four different groups in the sample depending on the number of written and read notes in the forum. The identified groups thus reflected differing levels of student activity in the KF: Group 1 was moderately active on the KF (M = 3.98 written notes / student; M = 34.61 read notes / student), Group 2 was very active (M = 15.29 written notes / student; M = 125.57 read notes / student), Group 3 was less active on the KF (M = 0.56 written notes / student; M = 7.79 read notes / student), and Group 4 was moderately active regarding the number of notes written (M = 2.58 notes / student) but less active regarding the number of notes read (M = 7.00 notes / student). Of relevance for the following discussion is the fact that Group 2 had a significantly higher number of written and read contributions compared to the other three groups. Also, their mean depth of explanation in the KF was significantly lower than the means of the other groups.

Secondly, the analyses showed that the quality of explanation differed across groups for the pre-activity interview. In particular, Group 2 had a significantly lower mean quality of explanation than Groups 1 and 3 and the control group. After controlling for a regression to the mean effect, the quality of explanation across groups did not differ significantly for the post-activity interview. Also, the magnitude of change between the pre- and post-activity interviews was significantly higher for Group 2 than for Groups 1 and 3 and the control group, even after controlling for differences on the pre-activity measure. Additionally, the numbers of written and read contributions were significantly associated with the magnitude of change. After further analysis, only the number of written contributions significantly predicted the magnitude of change. Finally, analyses showed that none of the dependent variables differed according to sex.

Altogether, these results suggest that the number of written contributions can predict a portion of the magnitude of change in the quality of explanation. Indeed, the results show that a greater usage of KF (a) favoured a greater magnitude of change and (b) enabled students with lower quality of explanation means to close the gap with students with higher quality explanation skills. However, these conclusions only apply when the quality of explanation is evaluated orally and individually. In fact, when the quality of the written contributions in KF is examined, Group 2 had a significantly lower depth of explanation mean compared to the other groups. It thus seems important to encourage students not simply to write a large number of contributions in the forum, but to write contributions that will improve their understanding of the question. Quantity does not guarantee quality.

Concerning oral explanation skills, Group 2 started significantly behind most of the other groups, and despite their very active participation in the forum, their written contributions showed less depth of explanation. However, their explanation skills increased the most between the two interviews.

This study relies on a solid design linking repeated student interviews, on the one hand, and collaborative writing, on the other. The number of cases was also impressive: interviews with nearly 300 students from different schools and school districts all over the province were conducted and then analyzed. A cluster analysis confirmed the researchers’ observations in the field regarding KF users and this allowed us to formally create user profiles, a difficult task in itself considering the tremendous number of variables in our particular research context. The mixed design allowed us to benefit from the strengths of both methodologies: the qualitative analysis of the students’ collaborative online discourse and of the individual pre- and post-activity interviews gave us better insight into student learning and explanation capacity, while the quantitative analyses allowed us to generate clear answers to our research questions. These analyses, combined with the teachers’ perceptions, have allowed us to benefit from a triangulation of methodologies that further validates our findings.

Indeed, our results show that infrequent users improved as much as non-users, and this confirms that in order to get results, the use of the tool must reach a certain level. It is not uncommon for teachers to want to “start small” with this kind of innovation. For example, they will choose to use KF to prepare activities for Christmas or Halloween, hoping that if it “doesn’t work,” at least it will not interfere with more “serious learning.” Our own intervention approach suggested working on central parts of the curriculum rather than peripheral ones. We believed that in doing so, students as well as teachers would benefit from the activity faster, but that it would also be the best way to make the most of the time spent using the tool. No school time is worth wasting.

As we expected, writing one or two contributions once in a while is not sufficient for students to improve their explanation skills. When we looked more closely, Group 2 students mostly came from two classes whose teachers incorporated networking activities in their daily routine for up to one hour each day. In these two classes, students spent as long as one hour every day reading, writing, and collaborating on KF on various school topics and authentic questions. The teachers also mentioned that with KF, these students participated more than other groups in authentic reading and writing activities (Laferrière et al., 2011). Clearly, active use of KF leads to greater improvement of student explanation skills, at least orally. But there is a need to reach a certain level of activity while maintaining focus on quality writing.

The fact that some groups, just like the control group, showed less progress in students’ explanation skills raises a new series of questions: Is effective classroom collective inquiry bound to the use of KF or another similar online collaborative space? Does this mean that the investigations that were essentially of a verbal nature had less of an impact on students’ explanation skills? Reading and writing in a greater proportion may have developed the students’ ability to make connections between concepts and knowledge (Bereiter, 2002). Students may have interacted more among themselves in a written form rather than a verbal form (Cazden, 1988). However, we doubt that teachers were as rigorous in respecting the structure of the inquiry process when they relied only on verbal classroom interaction (and other forms of exercise from the textbooks). One limitation of this study is that we have no data on the structure of classroom verbal discourse. Did it fall back to the standard I-R-E (Initiation-Response-Evaluation) structure (Cazden, 1988)?

Could KF affordances, emphasizing explanation and authentic questioning, be more challenging for students than other more standard classroom practices? In other RNS studies, students expressed their motivation to work with the technological tools, as these tools gave them access to other students’ ideas (Laferrière et al., 2011). The use of technology to enable students to improve their ability to explain and better understand ideas may only have an impact if such use is supported by an appropriate pedagogy. The quality of the teacher’s discourse is critical: it must lead students to ask authentic questions. Moreover, students must have the space and time for inquiry. Our study therefore shows that the combination of active student participation mediated by KF technology and a collaborative inquiry approach produced significant results for students. Other studies have arrived at related findings (Chin & Osborne, 2010; Pea, 2004; Turcotte, 2008; Zhang et al., 2006).

The results of this paper were presented to the participating teachers as well as to the entire RNS network. Emphasis was placed on the need for students to work with KF at a certain frequency before seeing definite results in student learning. There was a general acknowledgement that the major challenge for the RNS network remains, that is, to significantly increase the time spent using KF so that students may have more opportunities to experience knowledge building / creation (Scardamalia & Bereiter, 2008).

Given that Quebec’s professional educators are currently working to enhance the graduation rate of high school students and that there is much evidence that reading and writing are critical to student learning (Catel, 2001), the results of our study are of special relevance. They demonstrate that when students are in a stimulating, innovative environment that allows them to work on real ideas and complex problems, they are able to achieve more. Moreover, when an authentic audience is present (Allington & Cunningham, 1996; Atwell, 2002; Graves, 1991; Rijlaarsdam et al., 2008), one coming from a completely different environment, for example, Barcelona or Hong Kong (Laferrière et al., 2010), students’ interest is stimulated and the quality of their explanation skills can significantly improve.

Conclusion

This study provides empirical evidence that the informed and sustained use of a knowledge building tool to support student collaborative learning was able to significantly improve student explanation skills. However, less frequent use of KF was not linked to such improvement, confirming that a certain level of online activity is necessary in order to achieve such results. Indeed, minimal use of the tool, as described in Table 3, generated only as much improvement as was observed in the control group, which did not use the tool at all. This confirms our belief that in order to get significant results, teachers must focus on central elements of the curriculum and have their students read and write to a certain degree. Minimal efforts will generate minimal to no results.

This study also provided empirical evidence that explanation-based, rather than fact-based, online collaborative discourse led to greater improvement between pre- and post-activity interviews, even for students who had significantly lower results at the outset. This was the case even though most of these active students came from low socioeconomic status communities.

For the last ten years, the RNS initiative has aimed to enrich the learning environment of the students. These results show that frequent and quality interactions supported by Knowledge Forum can lead to the improvement of students’ explanation skills and to deeper understanding. The RNS initiative also shows how emerging technologies and globalization enable universities to develop new ways of supporting professional development in schools and other fields.

NOTES

- 1. The number of agreements (A) divided by the number of agreements plus disagreements (D) (A / (A + D)).

- 2. The application of a log transformation on both variables was tested. Eventually, this solution was discarded because it had no significant impact on the variables’ distribution.

- 3. There was a negative and significant correlation between the pre-activity scores and the magnitude of change between the pre- and post-activity scores (r = -.57, p < .001). In other words, the higher the pre-activity score was, the lower the magnitude of change between the pre- and post-activity measures was. Thus, this result confirmed the presence of a regression to the mean effect.
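
As an illustration of Notes 1 and 3, the following minimal Python sketch computes the agreement ratio A / (A + D) and the Pearson correlation between pre-activity scores and the pre-to-post change. The function names and the sample scores are hypothetical and were chosen only for illustration; they are not the study's data or its analysis scripts.

```python
from math import sqrt


def percent_agreement(agreements: int, disagreements: int) -> float:
    """Inter-rater agreement as A / (A + D), following Note 1."""
    total = agreements + disagreements
    if total == 0:
        raise ValueError("at least one coded unit is required")
    return agreements / total


def pearson_r(xs, ys) -> float:
    """Pearson product-moment correlation between two equal-length lists."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two lists of equal length with at least 2 values")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant data")
    return cov / sqrt(var_x * var_y)


def pre_vs_change_correlation(pre, post) -> float:
    """Correlate pre-activity scores with the change (post minus pre), as in Note 3."""
    change = [after - before for before, after in zip(pre, post)]
    return pearson_r(pre, change)


# Hypothetical values, invented for illustration only (not the study's data).
example_agreement = percent_agreement(agreements=45, disagreements=5)
pre_scores = [1, 2, 3, 4, 5]
post_scores = [3, 3, 4, 4, 5]
example_r = pre_vs_change_correlation(pre_scores, post_scores)

print(f"agreement = {example_agreement:.2f}")
print(f"r(pre, change) = {example_r:.2f}")
```

In this invented sample, students with lower pre-activity scores gain more, so the coefficient is negative. A negative coefficient of this kind is the pattern Note 3 reports as a regression to the mean effect, although a sketch on made-up numbers says nothing about the strength of that effect in the study itself.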

References

Allington, R. L., & Cunningham, P. M. (1996). Schools that work: Where all children read and write. New York, NY: HarperCollins College.

Atwell, N. (2002). Lessons that change writers. Portsmouth, NH: Heinemann.

Barron, B. (2003). When smart groups fail. The Journal of the Learning Sciences, 12, 307-359.

British Educational Communications and Technology Agency (BECTA). (2001). The digital divide: A discussion paper. Coventry, United Kingdom: British Educational Communications and Technology Agency.

Bereiter, C. (1994). Implications of postmodernism for science, or, science as progressive discourse. Educational Psychologist, 29(1), 3-12.

Bereiter, C. (2002). Education and mind in the knowledge age. Mahwah, NJ: Lawrence Erlbaum.

Bereiter, C., & Scardamalia, M. (1996). Rethinking learning. In D. R. Olson & N. Torrance (Eds.), The handbook of education and human development: New models of learning, teaching and schooling (pp. 485-513). Malden, MA: Blackwell.

Bereiter, C., & Scardamalia, M. (2006). Education for the knowledge age: Design-centered models of teaching and instruction. In P. A. Alexander & P. H. Winne (Eds.), Handbook of educational psychology (pp. 695-713). Mahwah, NJ: Lawrence Erlbaum.

Bereiter, C., & Scardamalia, M. (2010). Can children really create knowledge? Canadian Journal of Learning and Technology, 36(1). Retrieved from http://www.cjlt.ca/index.php/cjlt/article/view/585

Bielaczyc, K. (2006). Designing social infrastructure: Critical issues in creating learning environments with technology. Journal of the Learning Sciences, 15(3), 301-329.

Bielaczyc, K., & Collins, A. (2006). Technology as a catalyst for fostering knowledge-creating communities. In A. M. O’Donnell, C. E. Hmelo-Silver, & G. Erkens (Eds.), Collaborative learning, reasoning, and technology (pp. 37-60). Mahwah, NJ: Lawrence Erlbaum.

Bransford, J. D., Brown, A. L., & Cocking, R. R. (Eds.). (2000). How people learn: Brain, mind, experience, and school. Expanded edition. Washington, DC: National Academy Press.

Brown, A. L., & Campione, J. C. (1994). Guided discovery in a community of learners. In K. McGilly (Ed.), Classroom lessons: Integrating cognitive theory and classroom practice (pp. 229–270). Cambridge, MA: MIT Press / Bradford Books.

Brown, A., Ellery, S., & Campione, J. (1998). Creating zones of proximal development electronically. In J. G. Greeno & S. Goldman (Eds.), Thinking practices in mathematics and science learning (pp. 341–367). Mahwah, NJ: Lawrence Erlbaum.

Burns, R. P., & Burns, R. (2008). Business research methods and statistics using SPSS. London, United Kingdom: Sage.

Cakir, M. P., Zemel, A., & Stahl, G. (2009). The joint organization of interaction within a multimodal CSCL medium. International Journal of Computer-Supported Collaborative Learning, 4(2), 115-149.

Catel, L. (2001). Ecrire pour apprendre ? Ecrire pour comprendre ? Etat de la question. Aster, 33, 3-16.

Cazden, C. B. (1988). Classroom discourse: The language of teaching and learning. Portsmouth, NH: Heinemann.

Chan, C. K. K., & van Aalst, J. (2008). Collaborative inquiry and knowledge building in networked multimedia environments. In J. Voogt & G. Knezek (Eds.), International handbook of information technology in primary and secondary education (pp. 299-316). Dordrecht, Netherlands: Springer.

Chin, C., & Osborne, J. (2010). Supporting argumentation through students’ questions: Case studies in science classrooms. Journal of the Learning Sciences, 19(2), 230-284.

Coleman, E. B. (1998). Using explanatory knowledge during collaborative problem solving in science. Journal of the Learning Sciences, 7(3/4), 387-427.

Collins, A., & Halverson, R. (2009). Rethinking education in the age of technology: The digital revolution and schooling in America. New York, NY: Teachers College Press.

Cox, M. J. (1997). The effects of information technology on students’ motivation: Final report. Coventry, United Kingdom: National Council for Educational Technology.

Drucker, P. F. (1993). Postcapitalist society. New York, NY: HarperCollins.

Engle, R. A., & Conant, F. C. (2002). Guiding principles for fostering productive disciplinary engagement: Explaining an emergent argument in a community of learners classroom. Cognition and Instruction, 20(4), 399-483.

Finch, H. (2005). Comparison of the performance of nonparametric and parametric MANOVA test statistics when assumptions are violated. Methodology, 1(1), 27-38.

Graves, D. H. (1991). Build a literate classroom. Portsmouth, NH: Heinemann.

Hakkarainen, K. (2003). Progressive inquiry in a computer-supported biology class. Journal of Research in Science Teaching, 40(10), 1072-1088.

Hakkarainen, K. (2004). Pursuit of explanation within a computer-supported classroom. International Journal of Science Education, 26(8), 979-996.

Hamel, C., Allaire, S., & Turcotte, S. (2012). Just-in-time online professional development activities for an innovation in small rural schools. Canadian Journal of Learning and Technology / La Revue Canadienne de L’apprentissage et de La Technologie, 38(3).

Harasim, L. (2011). Learning theory and online technology: How new technologies are transforming learning opportunities. New York, NY: Routledge.

Hatano, G., & Inagaki, K. (1987). A theory of motivation for comprehension and its application to mathematics instruction. In T. A. Romberg & D. M. Steward (Eds.), The monitoring of school mathematics: Background papers: Vol. 2. Implications from psychology, outcomes of instruction (pp. 27-66). Madison, WI: Wisconsin Center for Educational Research.

Hewitt, J. (2002). From a focus on task to a focus on understanding: The cultural transformation of a Toronto classroom. In T. Koschmann, R. Hall, & N. Miyake (Eds.), CSCL2: Carrying forward the conversation (pp. 11-41). Mahwah, NJ: Lawrence Erlbaum Associates.

Kreijns, K., Kirschner, P. A., & Vermeulen, M. (2013). Social aspects of CSCL environments: A research framework. Educational Psychologist, 48(4), 229–242.

Krajcik, J., Soloway, E., Blumenfeld, P., & Marx, R. (1998). Scaffolded technology tools to promote teaching and learning in science. In C. Dede (Ed.), ASCD 1998 yearbook: Learning with technology (pp. 31-45). Alexandria, VA: Association for Supervision and Curriculum Development.

Laferrière, T., Erickson, G., & Breuleux, A. (2007). Innovative models of web-supported university-school partnerships. Canadian Journal of Education, 30(1), 211–238.

Laferrière, T., Montane, M., Gros, B., Alvarez, I., Bernaus, M., Breuleux, A., … Lamon, M. (2010). Partnerships for knowledge building: An emerging model. Canadian Journal of Learning and Technology, 36(1), 1-20. Retrieved from http://www.cjlt.ca/index.php/cjlt/article/download/578/280

Laferrière, T., Hamel, C., Allaire, S., Turcotte, S., Breuleux, A., Beaudoin, J., & Gaudreault-Perron, J. (2011). L’École éloignée en réseau (ÉÉR), un modèle. Québec, QC: CEFRIO.

Lipponen, L. (2000). Towards knowledge building discourse: From facts to explanations in primary students’ computer mediated discourse. Learning Environments Research, 3, 179-199.

Markauskaite, L. (2006). Towards an integrated analytical framework of information and communications technology literacy: From intended to implemented and achieved dimensions. Information Research, 11(3). Retrieved from http://InformationR.net/ir/11-3/paper252.html

McNeill, K. L., Lizotte, D. J., Krajcik, J., & Marx, R. W. (2006). Supporting students’ construction of scientific explanations by fading scaffolds in instructional materials. The Journal of the Learning Sciences, 15(2), 153-191.

Miles, M. B., & Huberman, A. M. (1999). Qualitative data analysis (3rd ed.). Thousand Oaks, CA: Sage.

Meyer, K., & Woodruff, E. (1997). Consensually driven explanation in science teaching. Science Education, 81, 173-192.

Morocco, C. C. (2001). Teaching for understanding with students with disabilities: New directions for research on access to the general education curriculum. Learning Disability Quarterly, 24, 5-13.

Nielsen, T., Karpatschof, B., & Kreiner, S. (2007). Regression to the mean effect: When to be concerned and how to correct for it. Nordic Psychology, 59, 231-250.

Partnership for 21st Century Skills. (2008). 21st century skills, education & competitiveness. Retrieved from http://www.p21.org/storage/documents/21st_century_skills_education_and_competitiveness_guide.pdf

Passey, D. (2001). Anytime anywhere learning pilot programme: A Microsoft UK supported laptop project: Learning gains in Year 5 and Year 8 classrooms. Reading, United Kingdom: Microsoft.

Pea, R. D. (2004). The social and technological dimensions of “scaffolding” and related theoretical concepts for learning, education and human activity. The Journal of the Learning Sciences, 13(3), 423-451.

Perkins, D., Crismond, D., Simmons, R., & Unger, C. (1995). Inside understanding. In D. Perkins, J. L. Schwartz, M. West, & M. S. Wiske (Eds.), Software goes to school: Teaching for understanding with new technologies (pp. 70-88). New York, NY: Oxford University Press.

Reigeluth, C. M., & Carr-Chellman, A. (2009). Understanding instructional theory. In C. M. Reigeluth & A. Carr-Chellman (Eds.), Instructional-design theories and models, Volume III: Building a common knowledge base. New York, NY: Routledge.

Rijlaarsdam, G., Braaksma, M., Couzijn, M., Janssen, T., Raedts, M., van Steendam, E., … van den Bergh, H. (2008). Observation of peers in learning to write. Journal of Writing Research, 1(1), 53-83.

Rosenblatt, L. M. (1991). Literacy-S.O.S! Language Arts, 68(6), 444-448.

Roschelle, J., Bakia, M., Toyama, Y., & Patton, C. (2011). Eight issues for learning scientists about education and the economy. Journal of the Learning Sciences, 20(1), 3-49.

Roth, W.-M., McGinn, M. K., Woszczyna, C., & Boutonné, S. (1999). Differential participation during science conversations: The interaction of focal artifacts, social configurations, and physical arrangements. Journal of the Learning Sciences, 8(3/4), 293-347.

Rummel, N., & Spada, H. (2005). Learning to collaborate: An instructional approach to promoting problem-solving in computer-mediated settings. Journal of the Learning Sciences, 14(2), 201-241.

Sawyer, R. K. (2006). Analyzing collaborative discourse. In R. K. Sawyer (Ed.), Cambridge handbook of the learning sciences (pp. 187-204). New York, NY: Cambridge University Press.

Scardamalia, M. (2004). Instruction, learning, and knowledge building: Harnessing theory, design, and innovation dynamics. Educational Technology & Society, 44(3), 30-33.

Scardamalia, M. (2006). Technology for understanding. In K. Leithwood, P. McAdie, N. Bascia, & A. Rodrigue (Eds.), Teaching for deep understanding: What every educator needs to know (pp. 103-109). Thousand Oaks, CA: Corwin Press.

Scardamalia, M., & Bereiter, C. (2008). Pedagogical biases in educational technologies. Educational Technology, 48(3), 3-11.

Sharples, M., Graber, R., Harrison, C., & Logan, K. (2009). E-Safety and Web2.0 for children aged 11-16. Journal of Computer-Assisted Learning, 25, 70-84.

Turcotte, S. (2008). Computer-supported collaborative inquiry in Remote Networked Schools. (Unpublished doctoral thesis). McGill University, Montreal, QC.

United Nations Educational, Scientific and Cultural Organization (UNESCO). (2010). ICT transforming education: A regional guide. Retrieved from http://unesdoc.unesco.org/images/0018/001892/189216e.pdf

White, T., & Pea, R. (2011). Distributed by design: On the promises and pitfalls of collaborative learning with multiple representations. Journal of the Learning Sciences, 20(3), 489-547.

Wiske, M. S. (1998a). The importance of understanding. In M. S. Wiske (Ed.), Teaching for understanding (pp. 1-9). San Francisco, CA: Jossey-Bass.

Wiske, M. S. (1998b). What is teaching for understanding? In M. S. Wiske (Ed.), Teaching for understanding (pp. 61-86). San Francisco, CA: Jossey-Bass.

Wiske, M. S., Franz, K. R., & Breit, L. (2005). Teaching for understanding with technology. San Francisco, CA: Jossey-Bass.

Zhang, J., Scardamalia, M., Reeve, R., & Messina, R. (2009). Designs for collective cognitive responsibility in knowledge-building communities. Journal of the Learning Sciences, 18, 7-44.

Zhao, Y., & Rop, S. (2001). A critical review of the literature on electronic networks as reflective discourse communities for inservice teachers. Education and Information Technologies, 6(2), 81-94.